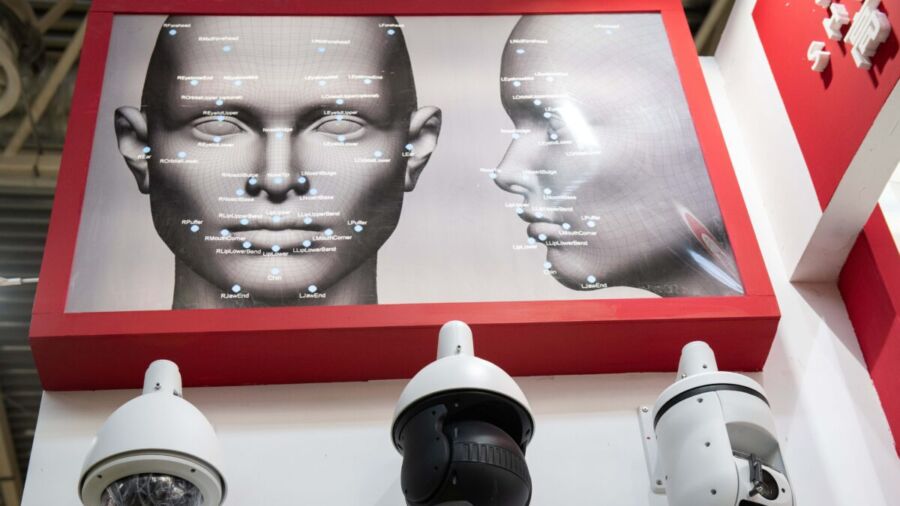

The European Parliament has voted for a complete ban on real-time, remote biometric surveillance, which would make it impossible for police forces across the EU to use live facial recognition technology. Lawmakers had agreed on the ban earlier, despite initial opposition over concerns that it might be too far-reaching.

The ban could put lawmakers on a collision course with EU member states that want to use the technology extensively for policing.

The vote also included additional measures to limit general-purpose artificial intelligence (AI) and so-called foundation models, such as OpenAI’s GPT-4. The legislation would require companies such as OpenAI Inc. and Google to conduct risk assessments and to provide summaries of the copyrighted material used to train their models, regardless of how those models are applied.

Speaking at a press conference, Parliament President Roberta Metsola said that any future innovations in artificial intelligence would need to remain within clearly defined boundaries and limitations.

“There is one thing that we will not compromise on: Anytime technology advances, it must go hand in hand with our fundamental rights and democratic values,” she added.

The full draft of the AI Act was passed on June 14 by an overwhelming majority: 499 members voted in favor, 28 voted against, and 93 abstained. The vote opens the so-called “trilogue” stage, in which the Parliament, the Council of the EU (representing the member states), and the European Commission negotiate the bill’s final details.

According to Bloomberg, the European Commission is aiming for a deal by the end of the year, meaning the new AI Act rules could apply to businesses as early as 2026.

However, some rules could apply even earlier, according to Brando Benifei, one of the act’s main authors.

Meanwhile, EU officials, including Executive Vice President Margrethe Vestager, are seeking to draw up a voluntary code of conduct with tech companies active across the G7, while also involving countries such as India and Indonesia.

A position agreed by EU member states at the end of last year would have permitted facial scanning in public spaces in certain law-enforcement situations. The issue will remain a red line for several member states in the upcoming negotiations.

Some members of the conservative-leaning European People’s Party pushed for exceptions to the ban, such as searches for missing children and the prevention of terrorist attacks. However, lawmakers overwhelmingly rejected these proposals in Wednesday’s sweeping vote.

“The result of today gives us even a stronger position. It’s clear that the parliament doesn’t want us to recede on such important topics, on avoiding mass surveillance,” said Benifei.

Concerns

In a report, the European Parliamentary Research Service describes how AI technology such as facial recognition could threaten personal freedoms.

“While there are real benefits to using facial recognition systems for public safety and security, their pervasiveness and intrusiveness, as well as their susceptibility to error, give rise to a number of fundamental rights concerns with regard, for instance, to discrimination against certain segments of the population and violations of the right to data protection and privacy,” the report states.

The AI Act was first proposed by the European Commission in 2021 and was initially touted as a risk-based approach that would regulate the applications of AI rather than the technology itself. The proposal would have banned practices such as social credit scoring, along with uses of technology likely to have a negative impact in terms of bias, discrimination, and citizens’ fundamental rights.

However, EU member states later pushed to bring general-purpose AI within the act’s scope, extending it to a much wider range of scenarios.

Members of the European Parliament reportedly went a step further by adding controls on “foundation models”: the large language models that underpin chatbots such as ChatGPT, which have faced intense public and regulatory scrutiny in recent months.

In a statement, Internal Market Commissioner Thierry Breton said he expects clear and proportionate rules on generative AI to be a focal point of the trilogue process.

“We need effective transparency requirements on AI-generated content and strict rules against ‘deep fakes,’” he said.

The scope of the new EU rules could have far-reaching implications for a sector currently estimated to be worth close to $1.5 trillion. Companies that fail to comply could face fines of up to 6 percent of their annual revenue.